How to study in 2025

Introduction

I completed my bachelor's degree in 2006 and a master's degree in 2012. With the recent advancements in AI and machine learning, I keep coming back to something I strongly believe:

We are limited by what we know.

So, in order to architect solutions and do consulting work that caters to these advancements, I decided to read more and understand what they are all about. I started reading and enrolled in online courses, and along the way I realized how much things have changed and how effectively one can study in 2025. Below is how I'm studying in 2025.

How to Get Focus

First, let's talk about the elephant in the room: how to get focus in the midst of continuous dopamine spikes provided by the endless scrolling of TikTok videos, Instagram reels, YouTube shorts, LinkedIn posts, etc.

One either needs to have self-control and discipline to overcome this first hurdle, or one can look for alternatives to stop this addiction.

Initially, I thought self-control would be easy and that I could manage it, but I failed miserably. I started looking for solutions and arrived at one after reading the book Make Time.

I began to apply the techniques mentioned in the book with the help of a tool called Freedom. It was quite effective in controlling this addiction but not enough to eradicate it completely. I then explored another app called Jomo (Joy of Missing Out), which offered stricter control, such as requiring a QR code scan to pause or abort a focus session.

After using all these tools and techniques for close to three years, I came to the conclusion that the more one tries to escape the addiction, the harder it becomes. Instead of always blocking these apps or limiting the time spent on them, I found that having focus sessions using techniques like the Pomodoro Technique and the Two Minute Rule can be quite effective.

So, my final routine for staying focused is to create a focus session in the Session app, which supports a Pomodoro timer, and to play background music with the Endel app to enhance my focus.
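For readers who want to see the shape of a Pomodoro sitting without installing an app, here is a minimal sketch. The durations (25-minute focus blocks, 5-minute short breaks, and a 15-minute long break after every fourth block) are the commonly cited defaults, not settings taken from the Session app, and `build_schedule` is a hypothetical helper name.

```python
from dataclasses import dataclass


@dataclass
class Block:
    kind: str      # "focus", "short_break", or "long_break"
    minutes: int


def build_schedule(focus_blocks, focus=25, short_break=5, long_break=15, cycle=4):
    """Return the ordered list of blocks for one study sitting."""
    schedule = []
    for i in range(1, focus_blocks + 1):
        schedule.append(Block("focus", focus))
        if i == focus_blocks:
            break  # no break is scheduled after the final focus block
        if i % cycle == 0:
            schedule.append(Block("long_break", long_break))
        else:
            schedule.append(Block("short_break", short_break))
    return schedule


if __name__ == "__main__":
    for block in build_schedule(4):
        print(f"{block.kind:<12}{block.minutes} min")
```

Adjusting the keyword arguments is enough to try shorter or longer focus blocks.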

Taking Notes

Ever since my school days, I have felt that paying close attention to the lecture and taking notes is essential, so while listening to a lecture I jot down the important points.

Here again, there is ample room to get distracted and wander off while listening to the lecture. The most efficient way to stay focused is to write things down, either on a piece of paper or on a tablet with a stylus. I personally feel typing is inferior unless one is a professional typist.

Between writing on a piece of paper and capturing notes on a tablet, I would choose the latter. The reason is that with tools like GoodNotes and Apple Notes, one can search the text in handwritten notes and retrieve it anywhere.

In addition to jotting down notes, one should record the audio so that it can be transcribed for later use, which I will explain in a while.

Further Research

Back in the day, after the lecture, if one wished to deepen their understanding, they had to visit the library, do a Google search, filter out the results, find the ones that were more meaningful, and write them down or, if affordable, print them out.

Around exam time, one had to go back and forth through piles of paper notes to find where something was written down, unless one was gifted and could remember exactly where and how things had been written, next to what, with which pen, and so on.

If you were not a gifted person, you would have had a hard time just searching for the notes.

My workflow

Given that we are in 2025, I started looking for newer solutions to help with research and to deepen my knowledge of what I'm studying.

Knowledge

The first thing I started doing is transcribing the lecture recordings. There are tools based on Whisper, OpenAI's speech recognition model (introduced in the paper "Robust Speech Recognition via Large-Scale Weak Supervision"), which can efficiently transcribe voice recordings or video lectures.

After trying out different tools, I settled on MacWhisper to transcribe YouTube videos and audio from any application or website.
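MacWhisper is a desktop app, but the same step can also be scripted. The sketch below assumes the open-source `openai-whisper` Python package (which needs `ffmpeg` installed) rather than MacWhisper itself, and the helper names are hypothetical. It relies on the `segments` list that Whisper returns, where each segment carries a `start` time in seconds and its `text`.

```python
def format_timestamp(seconds):
    """Render a time offset in seconds as MM:SS."""
    minutes, secs = divmod(int(seconds), 60)
    return f"{minutes:02d}:{secs:02d}"


def segments_to_lines(segments):
    """Turn Whisper-style segments (dicts with 'start' and 'text') into lines."""
    return [f"[{format_timestamp(s['start'])}] {s['text'].strip()}" for s in segments]


def transcribe(audio_path, model_name="base"):
    """Transcribe an audio file; assumes `pip install openai-whisper` and ffmpeg."""
    import whisper  # imported lazily so the helpers above work without it

    model = whisper.load_model(model_name)
    result = model.transcribe(audio_path)
    return segments_to_lines(result["segments"])


# Example (placeholder file name):
# print("\n".join(transcribe("lecture.mp3")))
```

Keeping the timestamps makes it easier to jump back to the right spot in the recording when a passage of the transcript looks wrong.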

Example: below is an excerpt of the transcript of a recent Computerphile video, "DeepSeek is a Game Changer for AI".

New day, another piece of AI is announced.

That's right.

Why is this one so important?

We don't tend to do that many videos for the release of a new AI model just because there are a lot of them and lots of them are not that interesting.

But in the last few days, a model called DeepSeek has come out and a new model called DeepSeek R1 that are very interesting.

And I think actually are really threatening the kind of monopoly that certain companies have on this system.

And so let's talk about why that is and why we should be really excited.

Perhaps we should just step away for a minute and just for those people that have kind of not been paying attention, maybe this is the first time you've ever watched a video with me in it.

What is a large language model?

A large language model is a very, very big transformer based neural network that does next word prediction.

And so there's a lot of jargon there.

So how do we get through some of that jargon?

Oh, yeah.

Okay.

So a neural network is the sort of a standard for machine learning.

Things like convolutional neural networks are very, very popular in image based computer vision, AI for video, these kind of things and transformers since about 2017 have become you you

....

What's the point of keeping the transcript as is? I don't find any value in storing it that way; instead, as a next step, I use an LLM (Large Language Model) with a prompt to convert it into the format I want. The prompt converts the transcribed notes into the following well-structured format:

## Introduction to Large Language Models

Perhaps we should just step away for a minute and just for those people that have kind of not been paying attention. Maybe this is the first time you've ever watched a video with me in it. What is a large language model?

A large language model is a very, very big Transformer-based neural network that does next word prediction. There's a lot of jargon there. So how do we get through some of that jargon?

## Understanding Neural Networks and Transformers

Oh, yeah, okay. So a neural network is the sort of a standard for machine learning. Things like convolutional neural networks are very, very popular in image-based computer vision, you know, AI for video, these kinds of things. Transformers, since about 2017, have become a really big thing in generative AI.

But what we didn't know really was how far you can push them or how good they can get. You could kind of, I suppose, split generative AI in two: you've got diffusion models which do image generation and you've got transformers that do text generation.

It's worth remembering that all modern AI of this kind is text generation rather than kind of going off and, you know, coming up with some fundamental concepts of your own and coming back.

## Training Large Language Models

Basically, the way that you normally train these models is you get a huge model that is too big for most of us to train. You get hundreds of thousands of GPUs, possibly. These are the graphics cards that train the model, and then you just batch through all of the text on the internet, learning how to predict the next word over and over again until you get so good at it that you can start regurgitating facts.

You can even start solving logic problems or mathematical problems and things like this. Over the last few years, since ChatGPT was announced in 2022, there has been this kind of arms race among all the tech companies of who can build the biggest model, who can build the most performant model.

Generally speaking, their approach to doing this is to make them bigger, to make the data sets bigger, to make the models bigger, to make them more clever, and train them until you get that better performance.

....
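The restructuring step can be sketched in code as well. The prompt used for the notes above is not reproduced in this article, so `PROMPT_TEMPLATE` below is an illustrative stand-in, and the 6,000-character chunk size is an arbitrary assumption for keeping long transcripts within a model's input limit. The sketch only prepares the prompts; sending them to an LLM is left to whichever client you use.

```python
PROMPT_TEMPLATE = (  # hypothetical wording, not the prompt used in this article
    "Rewrite the lecture transcript below as Markdown notes. "
    "Group related sentences into paragraphs, add a '##' heading per topic, "
    "and do not add facts that are not in the transcript.\n\n"
    "Transcript:\n{chunk}"
)


def chunk_transcript(lines, max_chars=6000):
    """Group transcript lines into chunks of at most max_chars characters.

    A single line longer than max_chars becomes its own oversized chunk.
    """
    chunks, current, size = [], [], 0
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip the blank lines between sentences
        extra = len(line) + (1 if current else 0)  # +1 for the joining space
        if current and size + extra > max_chars:
            chunks.append(" ".join(current))
            current, size = [], 0
            extra = len(line)
        current.append(line)
        size += extra
    if current:
        chunks.append(" ".join(current))
    return chunks


def build_prompts(lines, max_chars=6000):
    """Return one ready-to-send prompt per transcript chunk."""
    return [PROMPT_TEMPLATE.format(chunk=c) for c in chunk_transcript(lines, max_chars)]
```

Chunking on line boundaries keeps each sentence intact, at the cost of chunks that are slightly uneven in size.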

Connect

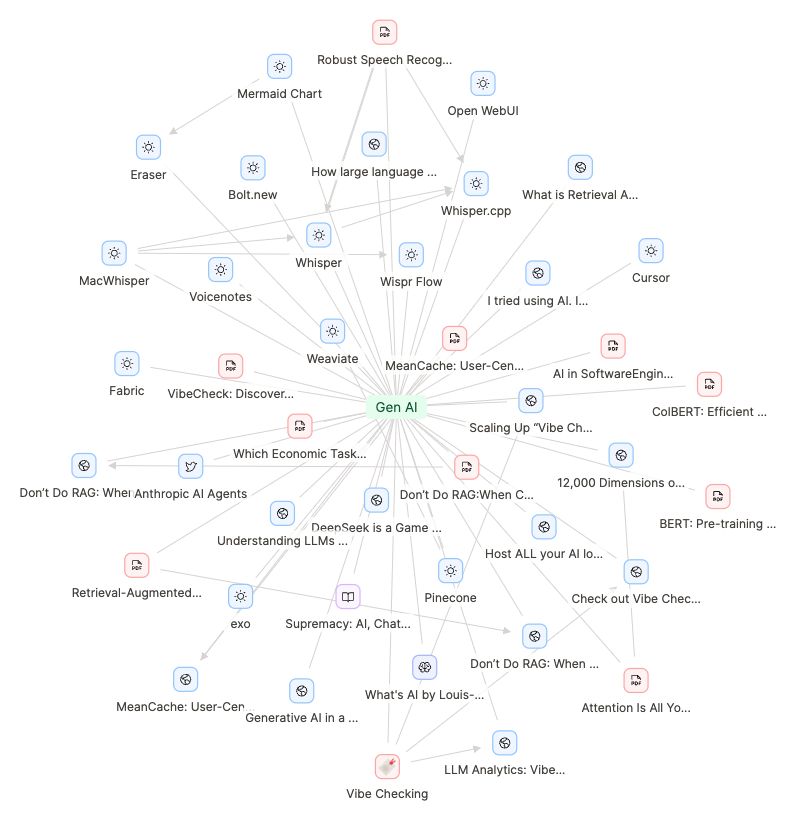

The next thing, which I have been doing for the last two years, is based on the concept of a Second Brain: I capture these notes in my PKM (Personal Knowledge Management) tool and connect them with other relevant notes.

After trying out many tools, including Obsidian, Logseq, Roam Research, Notion, and Tana, I finally settled on Capacities (perhaps I will write an article about my choice in detail later).

Here is a glimpse of how my notes related to Generative AI are connected.

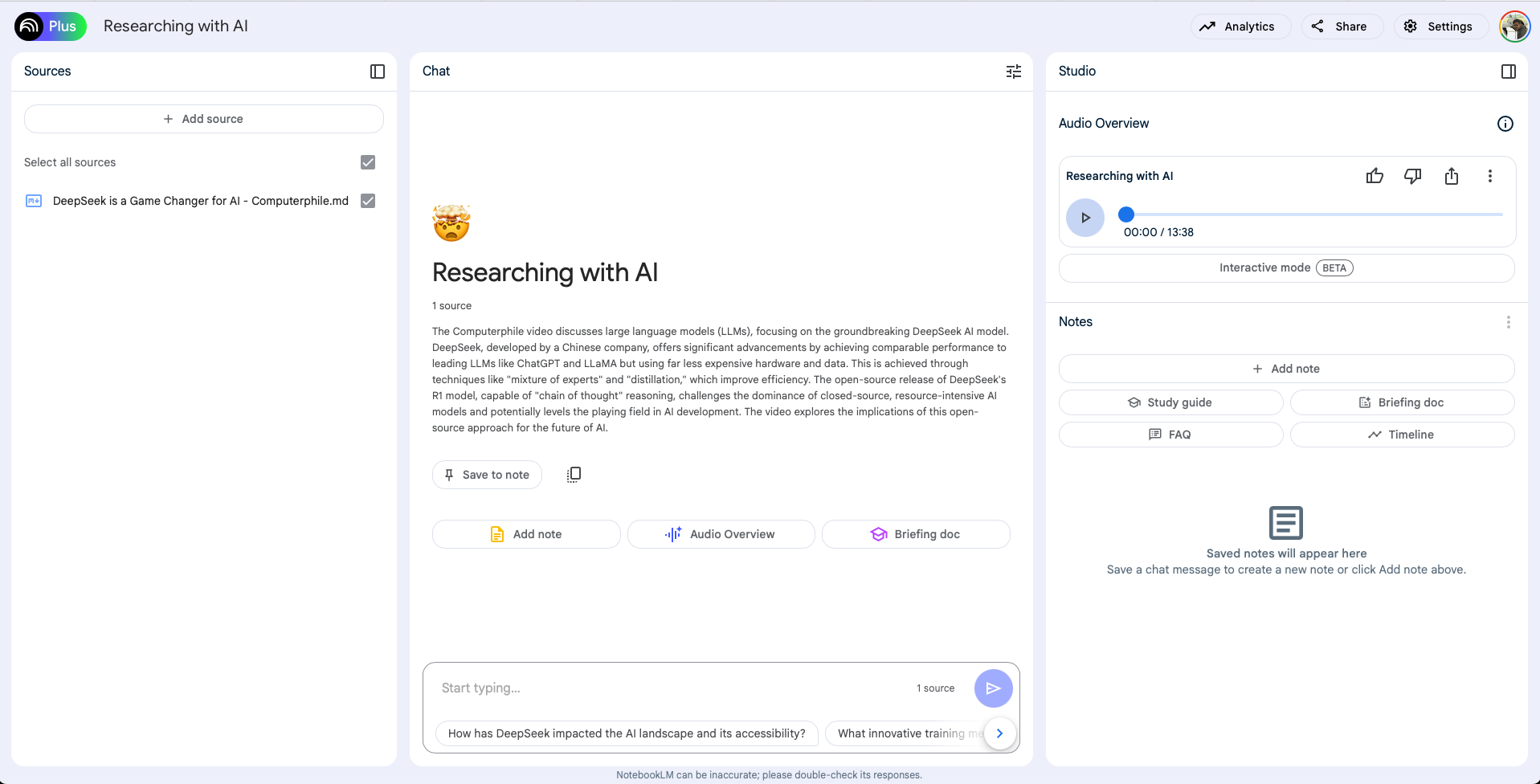

Research using NotebookLM

As with the step above, once you have converted all the lecture notes into the well-structured format, upload them to NotebookLM and do further research on your own notes. Because the answers are grounded in the sources you upload, this helps keep hallucinations down. More interestingly, you can generate an Audio Overview of the notes and engage in a deep-dive discussion with the AI research assistant.

I create a notebook on a topic and then add all my notes from the PKM tool as sources. You will not believe how quickly the information piles up. Then I query the notebook with prompts on whatever I'm interested in, and finally I create an Audio Overview and engage in a conversation with the AI.

Below is the Audio Overview generated from the structured notes I created.

Personally, I find this mind-blowing and very efficient. Try it once to appreciate how much it helps to deepen your knowledge and to discover different perspectives on the subject.

Research Papers

As part of the course, I ended up reading a lot of research papers, and I use the Moonlight app to read them effectively and take notes.

Recall

Spaced repetition is an evidence-based learning technique, usually practiced with flashcards, that helps you remember things and improves long-term memory.

That's fine, but how does one create flashcards from the notes?

We can use an LLM again here to convert the notes into flashcards, which can then be imported into tools like Anki App.

Example: below are the flashcards that I created from the structured notes above using the flashcard prompt.

Question,Answer

"What is a large language model?","A very big Transformer-based neural network that performs next word prediction."

"What are the two main types of generative AI models?","Diffusion models for image generation and transformers for text generation."

"What is the typical training process for large language models?","Using hundreds of thousands of GPUs to batch through text on the internet to predict the next word."

"What has been the trend among tech companies since ChatGPT was announced in 2022?","An arms race to build the biggest and most performant models."

"What is the difference in AI model openness between OpenAI and Meta?","OpenAI keeps models behind an API, while Meta releases models like LLaMA for free."

"What is the significance of DeepSeek in AI model training?","DeepSeek allows training with more limited hardware and reduces the amount of data needed for training."

"What is DeepSeek V3?","A flagship model similar to ChatGPT, trained on extensive text data with improved performance and lower training costs."

"What is the 'mixture of experts' method in AI models?","A technique where different parts of a network focus on specific tasks to improve efficiency."

"What is the process of 'distillation' in training AI models?","Using a large model to train a smaller model by asking questions and using its answers."

"What are 'mathematical savings' in the context of DeepSeek?","Techniques that reduce the number of computations needed for model inference, allowing for more efficient training."

"What is 'chain of thought' in AI models?","A method where the model writes down a step-by-step process to solve complex problems."

"What training methodology does DeepSeek R1 use?","It uses reinforcement learning by rewarding the model based on the correctness of its answers."

"What impact does DeepSeek have on the AI industry?","It challenges the reliance on expensive hardware, potentially leading to a more level playing field in AI development."
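Since LLM output is not guaranteed to be well-formed, it can help to check the CSV before importing it. The sketch below uses Python's standard `csv` module to parse rows in the `Question,Answer` layout shown above and to reject incomplete cards. `load_flashcards` is a hypothetical helper, and the two-column layout is assumed from the example.

```python
import csv
import io


def load_flashcards(csv_text):
    """Parse 'Question,Answer' CSV text into a list of (question, answer) pairs."""
    reader = csv.DictReader(io.StringIO(csv_text.strip()))
    if reader.fieldnames != ["Question", "Answer"]:
        raise ValueError(f"unexpected header: {reader.fieldnames}")
    cards = []
    # Blank lines are skipped by DictReader; numbering counts data records only.
    for record_number, row in enumerate(reader, start=1):
        question = (row["Question"] or "").strip()
        answer = (row["Answer"] or "").strip()
        # A None key means the row had more than two columns.
        if not question or not answer or None in row:
            raise ValueError(f"record {record_number} is not a complete question/answer pair")
        cards.append((question, answer))
    return cards
```

A failed check usually points to an unquoted comma or a missing answer, which is easier to fix in the CSV than after the cards have been imported.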

Every day I choose a topic and go over all of its flashcards. This refreshes my memory and helps me recall the information and cite ideas, people, and articles in conversation.
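Flashcard apps usually handle scheduling for you, but the idea behind spaced repetition is simple enough to sketch. What follows is a minimal Leitner-style scheduler, one common way to space reviews and not the algorithm of any particular app: a correct answer moves a card to the next box, which has a longer review gap, and a wrong answer sends it back to box 1. The box intervals (1, 2, 4, 8, and 16 days) are illustrative assumptions.

```python
from dataclasses import dataclass
from datetime import date, timedelta

INTERVALS_DAYS = [1, 2, 4, 8, 16]  # assumed review gap for boxes 1..5


@dataclass
class Card:
    question: str
    answer: str
    box: int = 1            # new cards start in box 1
    due: date = date.min    # date.min means "due immediately"


def review(card, correct, today):
    """Update a card's box and next due date after one review."""
    if correct:
        card.box = min(card.box + 1, len(INTERVALS_DAYS))
    else:
        card.box = 1
    card.due = today + timedelta(days=INTERVALS_DAYS[card.box - 1])
    return card


def due_cards(cards, today):
    """Return the cards that should be reviewed on the given day."""
    return [c for c in cards if c.due <= today]
```

With a schedule like this, well-known cards come up less and less often, so a daily session spends most of its time on the cards that are still hard.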

Conclusion

I find these new tools to be fascinating and helpful in deepening knowledge on the subject. I hope this article has provided a new perspective on studying in 2025.